Delay your decisions

I design very little upfront. When I need to make an estimate, I design a little more. Sometimes the project is hardly specced and there is a lot of exploring to do.

In this post I would like to give a demonstration of how a recent project developed.

Initial stage

Client: “We want a fancier frontend for our data”.

Me: “Sure, any limitations?”

Client: “We give you 4 months”

Me: “Can I use any tech I want?”

Client: “Na, we want to be able to continue on what you’re going to create, so please use P, X, Y and/or Z.”

Me: “Sure, P is not my favourite, but I dealt with it some time ago, so ok, we keep it simple anyway.”

The plan that followed:

Initially I thought I could fancy up the CSS, but the old product was in a very bad state and not really maintainable. So I decided to create a thin API layer in P (yes, PHP) and a modern front-end layer in JavaScript, using a framework that I co-introduced in the organisation a few years ago (React). I quickly came up with the idea of a front-end pulling a configuration from the API, and then pulling in all the bits of information required to build the screen described by that configuration. No GraphQL; it had to be kept simple at the server side, so simple GET requests.
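To make the idea concrete, here is a minimal sketch of the "config first, data second" flow. It is written in Python for brevity, not in the JavaScript the real front-end uses, and everything in it is an assumption for illustration: the `/api/config` path, the `panels` and `source` fields and the sample data are all made up.

```python
from typing import Any, Callable

# Hypothetical fetcher type: takes a URL path, returns the decoded JSON body.
Fetcher = Callable[[str], Any]


def load_screen(fetch_json: Fetcher, config_path: str = "/api/config") -> dict[str, Any]:
    """Fetch the screen configuration, then fetch every data source it lists."""
    config = fetch_json(config_path)
    panels = []
    for panel in config.get("panels", []):
        # One plain GET per panel; no query language involved.
        data = fetch_json(panel["source"])
        panels.append({"title": panel["title"], "data": data})
    return {"title": config.get("title", ""), "panels": panels}


# Stand-in for the real HTTP layer, so the sketch runs without a server.
# All paths and values below are invented.
FAKE_API = {
    "/api/config": {
        "title": "Dashboard",
        "panels": [
            {"title": "Rainfall", "source": "/api/data/rainfall"},
            {"title": "Temperature", "source": "/api/data/temperature"},
        ],
    },
    "/api/data/rainfall": [3, 0, 12],
    "/api/data/temperature": [18, 21, 19],
}

screen = load_screen(FAKE_API.__getitem__)
```

The server only ever answers simple GET requests; all knowledge about which requests belong together lives in the configuration.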

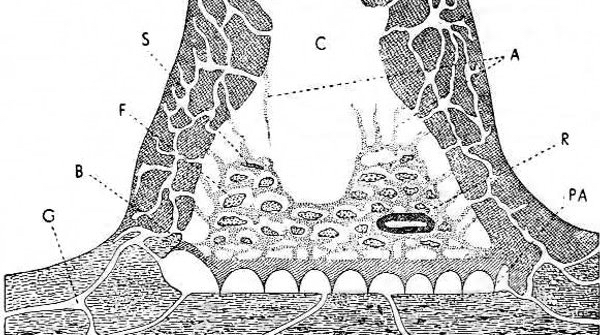

Structure of a nest of Macrotermes natalensis HAV (Public Domain Image, via Wikipedia). Termites in general have often been studied for their collaborative behaviour.

The elephant in the room:

Client: “we don’t know about authentication yet.”

Me: “ok. we fix that later”

Adding authentication

Everything was written as a public API. While there was no strictly confidential information involved, it was uncertain whether we were going to continue with the proprietary authentication layer they were using for the old project, running as a plugin on the webserver (which introduced quite some UI issues), or move to another system.

Choosing Keycloak

I picked up signals about a plan to use a new central OAuth-based authentication system, Keycloak. Work had started on it, but no central deployment was available yet. The proprietary system, however, turned out to be basically End-Of-Life. I endorsed adopting the central OAuth-based system for this project. We were able to get some additional development hours from the internal development team to get it started, and I was able to set up a running instance.

Side note: As of now, almost a year later, there still isn’t a central OAuth system, but the test-instance has been duplicated on production systems. I wouldn’t say we made the wrong bet. But pushing new technology in large organisations can be hard, especially when authentication is involved.

New requirement: deployments

Client: “we want to do the deployments”

Me: “Sure, I’ve created ansible scripts from the start, ‘cause I already had to sync 2 server-setups, that are kind of hard to maintain. Just tell me where to put the ansible scripts.”

Client: “Silence”

Me: “I’ll put them into this old private repository you have, for internal use only, anyone should be able to access them”

The to-be deployers: “Let’s see…”

Me: “This ain’t gonna work, but I’ve documented the hell out of it”

Me: “We need a CI (Continuous Integration)”

Other devs @ client: “We have multiple, but no standards”

Side note: In my safe ruby-space, Capistrano solves 90% of my deployment reproducibility issues. Sadly for me there is no ruby at this org, but some people here were using ansible, so that’s what I went for. Currently, however, they’re migrating to mostly Docker / Kubernetes, with some Kubernetes support.

Gitlab

There was basically no place to put executable code for a deployment. However, Gitlab.com was gaining traction within the organisation, besides the Gitlab adoption we had pushed a few years earlier. Compared to Jenkins, TeamCity and Hudson, Gitlab actually seemed reasonably user friendly, and it offered integrated CI, in contrast to Github, which required setting up something like TravisCI.

One of the internal developers introduced me to running self-hosted task runners, which allowed us to deploy apps from within their secure environment.

I can really recommend working with Gitlab.

The adjustment

Client: “We really want an editor, editing text files probably won’t do”

Me: “Sure, I was kind of thinking the same…”

Initially I was thinking of creating some YAML based configuration per client. Those would be edited and stored in the same git project as the server. Until then I’d been building a tree of objects and creating a JSON response for this tree using PHP. But that wouldn’t work; things had grown too complicated…

The first option I considered was an option within the front-end tooling I was using; essentially a state editor. This state would then be stored server side. I tried to create a Proof of Concept (POC), but I didn’t get up to speed fast.

Hence I arrived at another idea: wouldn’t there be some standard JSON-editor? Turned out there was something better: a JSON-schema based editor, allowing you to define structure and constraints of a JSON file to be.

Choosing JSON Schema

The line of thinking was: I’m already using JSON for the client to parse; what if we could write down the rules for that JSON? Maybe even in a standard format. Then maybe, just maybe, we’d be able to find standard editors obeying these rules.

While I initially rejected the idea, since a generic interface will never be as good as a specific interface, time was not on our side.

The format: JSON Schema

The editor: JSON-editor
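To illustrate what "writing down the rules" looks like, here is a small sketch: a made-up schema for one client's configuration, plus a hand-written checker that understands only a handful of JSON Schema keywords (`type`, `required`, `properties`, `items`, `enum`). It is not a full JSON Schema implementation and not the project's actual schema; the field names are assumptions.

```python
from typing import Any

# Hypothetical schema for one client's configuration; field names are made up.
CLIENT_SCHEMA = {
    "type": "object",
    "required": ["title", "panels"],
    "properties": {
        "title": {"type": "string"},
        "panels": {
            "type": "array",
            "items": {
                "type": "object",
                "required": ["title", "source"],
                "properties": {
                    "title": {"type": "string"},
                    "source": {"type": "string"},
                    "chart": {"enum": ["line", "bar", "map"]},
                },
            },
        },
    },
}

# Only the JSON types this sketch needs.
TYPES = {"object": dict, "array": list, "string": str}


def validate(value: Any, schema: dict, path: str = "$") -> list[str]:
    """Return a list of error messages; supports only a small keyword subset."""
    errors: list[str] = []
    expected = schema.get("type")
    if expected and not isinstance(value, TYPES[expected]):
        return [f"{path}: expected {expected}"]
    if "enum" in schema and value not in schema["enum"]:
        errors.append(f"{path}: must be one of {schema['enum']}")
    if isinstance(value, dict):
        for key in schema.get("required", []):
            if key not in value:
                errors.append(f"{path}: missing required property '{key}'")
        for key, subschema in schema.get("properties", {}).items():
            if key in value:
                errors.extend(validate(value[key], subschema, f"{path}.{key}"))
    if isinstance(value, list) and "items" in schema:
        for index, item in enumerate(value):
            errors.extend(validate(item, schema["items"], f"{path}[{index}]"))
    return errors


good = {"title": "Dashboard",
        "panels": [{"title": "Rainfall", "source": "/api/data/rainfall", "chart": "bar"}]}
bad = {"title": "Dashboard",
       "panels": [{"title": "Rainfall", "chart": "pie"}]}

good_errors = validate(good, CLIENT_SCHEMA)  # no errors
bad_errors = validate(bad, CLIENT_SCHEMA)    # missing "source", unknown "chart"
```

The same schema that guards the data can drive a generic editor: every `enum` can become a dropdown and every `required` a mandatory field, which is what makes a schema-based editor attractive when time is short.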

But what about storage?

While the Keycloak-instance required a database, I didn’t use one for any of the APIs, which were a growing set of GET calls processing raw data on the fly (maybe to be cached when needed in the future). So where would the new config files go?

Git for storage

Realising that git was already used for storage, I wrote a thin wrapper around git for managing JSON files on a dedicated repository. The dedicated repository would act as a line of defence against unwanted changes to the other source code. The deployment script would merge the repos together.
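A thin wrapper like this can be very small. The sketch below is in Python and is not the code used in the project; the class name `ConfigStore`, the one-file-per-name layout and the commit message format are all assumptions. The git calls go through an injectable runner, so the example itself runs without a git installation.

```python
import json
import subprocess
import tempfile
from pathlib import Path
from typing import Callable, Sequence

Runner = Callable[[Sequence[str], Path], None]


def run_git(args: Sequence[str], cwd: Path) -> None:
    """Default runner: call the real git binary inside the repository."""
    subprocess.run(["git", *args], cwd=cwd, check=True)


class ConfigStore:
    """Stores JSON documents as files in a dedicated git working copy."""

    def __init__(self, repo_dir: Path, run: Runner = run_git) -> None:
        self.repo_dir = Path(repo_dir)
        self.run = run

    def _path_for(self, name: str) -> Path:
        # Accept plain names only, so a request cannot escape the repository.
        if not name.replace("-", "").replace("_", "").isalnum():
            raise ValueError(f"invalid config name: {name!r}")
        return self.repo_dir / f"{name}.json"

    def read(self, name: str) -> dict:
        return json.loads(self._path_for(name).read_text(encoding="utf-8"))

    def write(self, name: str, data: dict, author: str) -> None:
        path = self._path_for(name)
        # Stable formatting keeps the git diffs small and readable.
        text = json.dumps(data, indent=2, sort_keys=True) + "\n"
        path.write_text(text, encoding="utf-8")
        self.run(["add", path.name], self.repo_dir)
        self.run(["commit", "-m", f"Update {name} (by {author})"], self.repo_dir)


# Usage with a recording runner instead of real git.
calls: list[list[str]] = []
with tempfile.TemporaryDirectory() as tmp:
    store = ConfigStore(Path(tmp), run=lambda args, cwd: calls.append(list(args)))
    store.write("client-a", {"title": "Dashboard"}, author="editor")
    stored = store.read("client-a")
```

With the default runner, `git commit` exits with an error when nothing changed, so a real wrapper would have to handle that case, along with pushing and concurrent edits. What you get for free is history, authorship and rollback, without adding a database.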

Where we are now

12 months ago I started, basically from scratch; I’m now managing 5 git repositories:

- The server

- The client

- The deployment scripts (initially hosted in an internal Mercurial repository)

- The JSON-editor based admin client

- The configuration files

After only 2 months we had a running demo, with real data, satisfying a then complaining client. Within 4 months we had an application running in production, satisfying another client and delivering more data to our end-users, using different styles of graphing, and several interactive map-based visualisations.

After those 4 months of near full-time labour we continually added small improvements: new data sources were added, the editor was developed, and I further documented the workings to allow the internal team to add clients and data sources without external assistance.

The great take away: delay your decisions

While heavily demand driven, I’m quite proud of the current state of the project. By not making budget driven choices up front, but delaying the decisions and making them in situ, with the constraints of that moment, it was and is possible to deliver visible progress to the end-user while slowly improving long-term sustainability.